Homelab Update: Local RAG Setup Complete!

I recently finished setting up the basic configuration for my homelab (I finally bought a case 😅), and it's been a great hands-on experiment!

Here's what I've built so far:

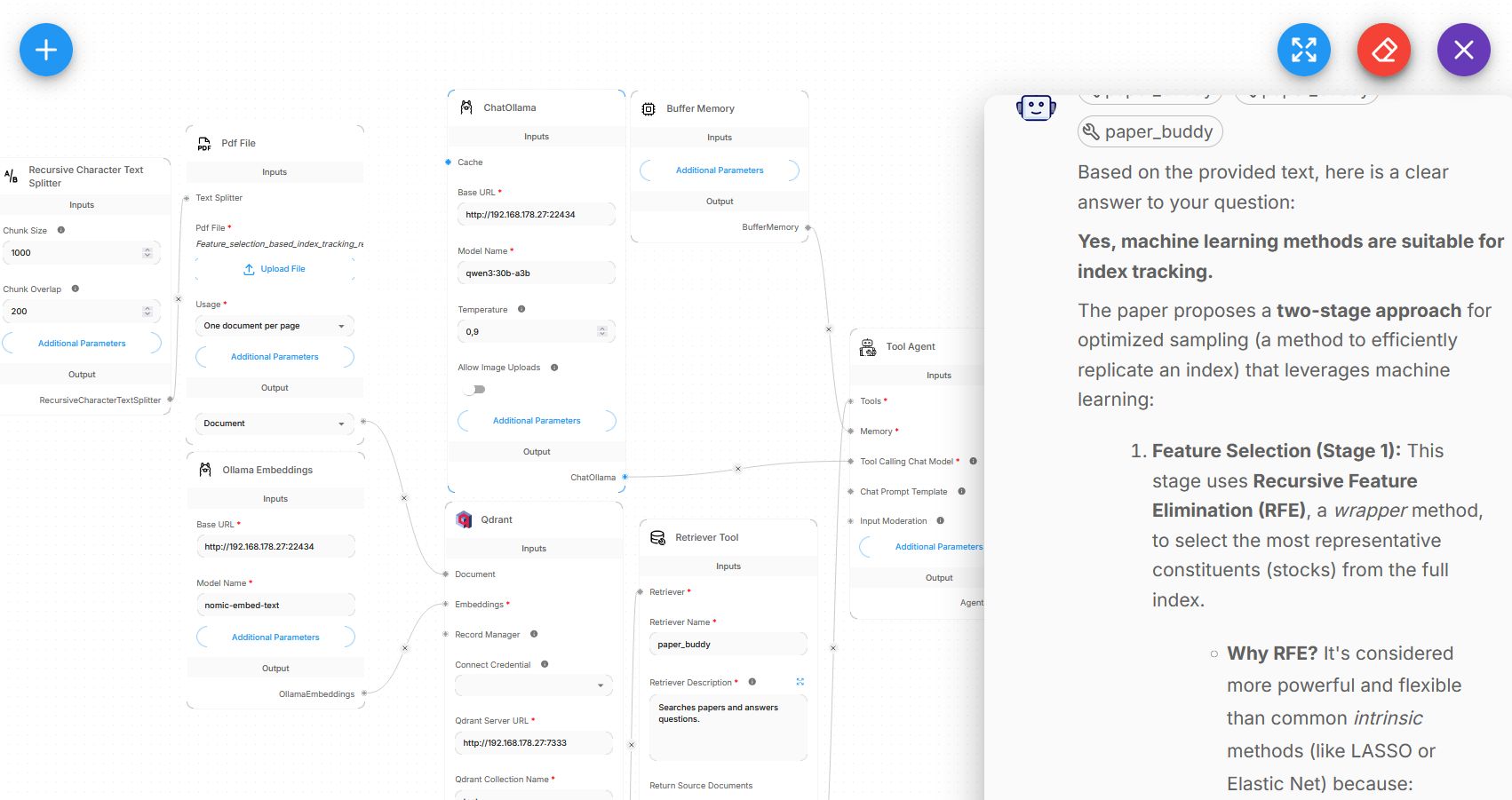

- 🧠 Ollama running local LLMs like qwen3:30b-a3b, which runs perfectly on CPU only!

- 📦 Qdrant as my vector database

- 🔗 Flowise to integrate everything. I've been particularly interested in exploring no/low-code AI tools for building and managing LLM pipelines visually.

Have a look at the workflow.

The goal: an entirely local RAG (Retrieval-Augmented Generation) setup.

As a test, I uploaded a research paper (in PDF format) and was able to chat with the agent about its content. It's great to see how well this works with so little effort. 💪
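
For anyone curious what a pipeline like this boils down to without the visual editor, here is a minimal sketch in plain Python. It is not what Flowise generates, just my rough approximation of the same flow: chunk the text, embed each chunk with Ollama, store the vectors in Qdrant, then retrieve the closest chunks and pass them to the LLM. Assumptions: Ollama and Qdrant listen on their default ports (11434 and 6333), `nomic-embed-text` is pulled as the embedding model, and the collection name `papers` and all helper names are made up for illustration. PDF text extraction is left out.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # Ollama's default port
QDRANT_URL = "http://localhost:6333"   # Qdrant's default REST port
EMBED_MODEL = "nomic-embed-text"       # assumption: any Ollama embedding model works
CHAT_MODEL = "qwen3:30b-a3b"           # the model mentioned above
COLLECTION = "papers"                  # hypothetical collection name


def call_json(url, payload, method="POST"):
    """Send a JSON request and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method=method,
    )
    with urllib.request.urlopen(req) as resp:
        return json.loads(resp.read().decode("utf-8"))


def chunk_text(text, size=500, overlap=50):
    """Split text into overlapping character windows."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def embed(text):
    """Get an embedding vector from Ollama's /api/embeddings endpoint."""
    out = call_json(f"{OLLAMA_URL}/api/embeddings",
                    {"model": EMBED_MODEL, "prompt": text})
    return out["embedding"]


def index_chunks(chunks):
    """Create the collection (fails if it already exists) and upsert all chunks."""
    vectors = [embed(c) for c in chunks]
    call_json(f"{QDRANT_URL}/collections/{COLLECTION}",
              {"vectors": {"size": len(vectors[0]), "distance": "Cosine"}},
              method="PUT")
    points = [{"id": i, "vector": v, "payload": {"text": c}}
              for i, (c, v) in enumerate(zip(chunks, vectors))]
    call_json(f"{QDRANT_URL}/collections/{COLLECTION}/points?wait=true",
              {"points": points}, method="PUT")


def retrieve(question, k=3):
    """Return the text of the k chunks closest to the question."""
    out = call_json(f"{QDRANT_URL}/collections/{COLLECTION}/points/search",
                    {"vector": embed(question), "limit": k, "with_payload": True})
    return [hit["payload"]["text"] for hit in out["result"]]


def build_prompt(question, contexts):
    """Combine the retrieved chunks and the question into a single prompt."""
    context = "\n\n".join(contexts)
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"


def answer(question):
    """Retrieve context from Qdrant and ask the local LLM via Ollama."""
    prompt = build_prompt(question, retrieve(question))
    out = call_json(f"{OLLAMA_URL}/api/generate",
                    {"model": CHAT_MODEL, "prompt": prompt, "stream": False})
    return out["response"]
```

With both services running, `index_chunks(chunk_text(paper_text))` followed by `answer("What is the main contribution?")` would be the whole round trip, where `paper_text` is the already extracted text of the PDF. Newer Qdrant versions also offer a `points/query` endpoint, so treat the search call as one option rather than the only way.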